SegBuilder: A Semi-automatic Annotation Tool for Segmentation

Date: February 27, 2025

SegBuilder is a web-based tool that leverages unsupervised segmentation models for semantic segmentation annotation.

Relevant Papers and Presentations

We are presenting our peer-reviewed paper at WACV 2025 and will also be showing a poster.

Our student collaborators, Sean Chen, Sameer Chaudhary, and Jacob Elafros, also presented a poster at the 2024 CCSC Central Plains conference, where they won the Best Student Poster Award.

The code for SegBuilder is available on GitHub.

SegBuilder: A Semi-Automatic Annotation Tool for Segmentation

Md Alimoor Reza, Eric Manley, Sean Chen, Sameer Chaudhary, Jacob Elafros

Proceedings: Proceedings of the Winter Conference on Applications of Computer Vision (WACV)

Date: 2025 Feb | Pages: 8483-8492

Reza, M. A., Manley, E., Chen, S., Chaudhary, S., & Elafros, J. (2025). SegBuilder: A Semi-Automatic Annotation Tool for Segmentation. Proceedings of the Winter Conference on Applications of Computer Vision (WACV), 8483–8492.

@inproceedings{reza_wacv2025,
  author = {Reza, Md Alimoor and Manley, Eric and Chen, Sean and Chaudhary, Sameer and Elafros, Jacob},
  title = {SegBuilder: A Semi-Automatic Annotation Tool for Segmentation},
  booktitle = {Proceedings of the Winter Conference on Applications of Computer Vision (WACV)},
  month = feb,
  year = {2025},
  pages = {8483-8492},
}

This paper addresses the problem of image annotation for segmentation tasks. Semantic segmentation involves labeling each pixel in an image with predefined categories, such as sky, cars, roads, and humans. Deep learning models require numerous annotated images for effective training, but manual annotation is slow and time-consuming. To mitigate this challenge, we leverage the Segment Anything Model (SAM) – a vision foundation model. We introduce SegBuilder, a framework that incorporates SAM to automatically generate segments, which are then tagged by human annotators using a quick selection list. To demonstrate SegBuilder’s effectiveness, we introduced a novel dataset for image segmentation in underwater environments featuring animals such as sea lions, beavers, and jellyfish. Experiments on this dataset showed that SegBuilder significantly speeds up the annotation process compared to the publicly available tool, Label Studio. SegBuilder also includes a free-form drawing tool, allowing users to create correct segments missed by SAM. This feature is particularly useful for scenes with shadows, camouflaged objects, and part-based segmentation tasks where SAM falls short. Experimentally, we demonstrated SegBuilder’s efficacy in these scenarios, showcasing its potential for generating pixel-wise annotations crucial for training robust deep learning models for semantic segmentation.

SegBuilder: Semi-automatic Annotation for Semantic Segmentation (Best Student Poster Award)

Sean Chen, Sameer Chaudhary, Jacob Elafros, Eric Manley, Md. Alimoor Reza

Conference: Consortium for Computing Sciences in Colleges Central Plains Regional Conference

Date: 2024 Apr

Chen, S., Chaudhary, S., Elafros, J., Manley, E., & Reza, M. A. (2024, April). SegBuilder: Semi-automatic Annotation for Semantic Segmentation (Best Student Poster Award). Consortium for Computing Sciences in Colleges Central Plains Regional Conference.

@conference{chen_ccsc2024segbuilder,
  address = {Lamoni, Iowa},
  author = {Chen, Sean and Chaudhary, Sameer and Elafros, Jacob and Manley, Eric and Reza, Md. Alimoor},
  booktitle = {Consortium for Computing Sciences in Colleges Central Plains Regional Conference},
  month = apr,
  title = {{SegBuilder}: Semi-automatic Annotation for Semantic Segmentation (\emph{Best Student Poster Award})},
  year = {2024},
}

Summary

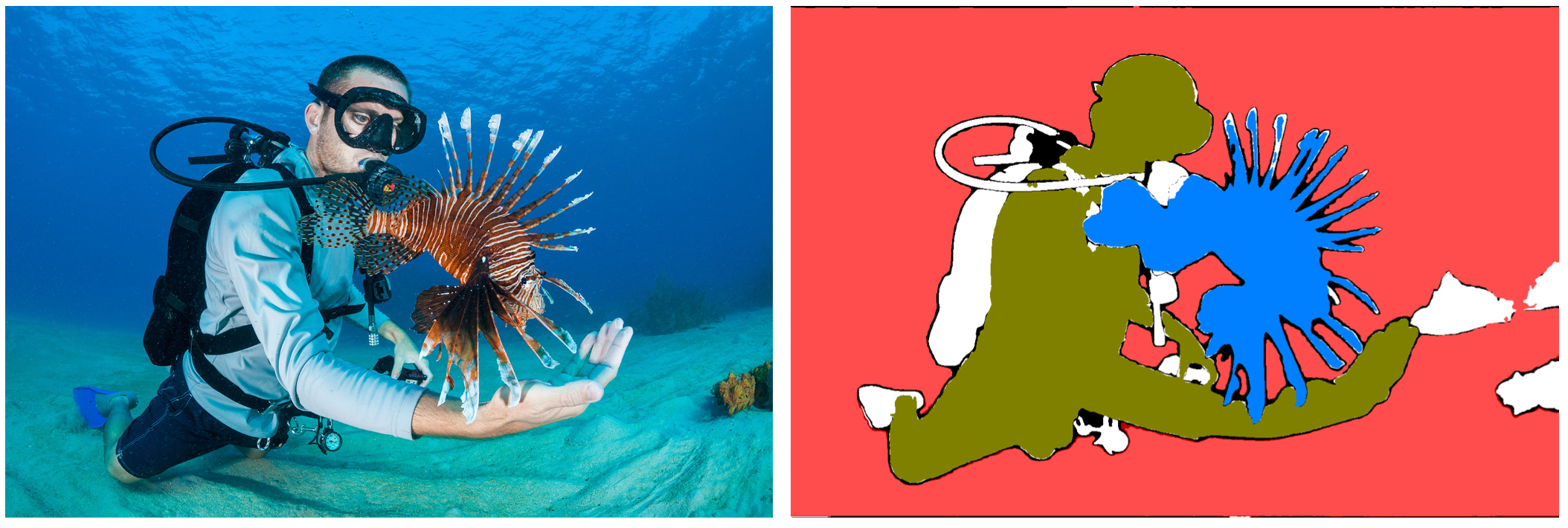

Segmentation is a computer vision task that identifies which portions of an image belong to which objects. For example, the image on the left shows a scuba diver interacting with a lionfish. If we painted over different parts of the image with different colors, we might produce segments like those shown on the right.

Segmenting an image

Unsupervised Segmentation

A computer vision model that automatically produces segments like these without assigning any meaning to them is called an unsupervised segmentation model. Such a model tries to determine that some pixels belong to one object and others belong to a different object, but it does not attach labels like “human” or “lionfish” to those parts.
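To make the idea concrete, here is a toy stand-in for an unsupervised segmenter. It is a classic region-growing (flood-fill) method, not SAM and not SegBuilder's code; the function name `segment_by_similarity` and its `threshold` parameter are illustrative. It assumes a tiny grayscale image stored as a list of rows, groups neighboring pixels with similar intensities, and gives each group an arbitrary integer id. That is exactly the "this pixel goes with that one" information an unsupervised model provides, with no labels attached.

```python
from collections import deque


def segment_by_similarity(image, threshold=10):
    """Assign an anonymous integer segment id to every pixel.

    Neighboring pixels (4-connectivity) whose intensities differ by at most
    `threshold` end up in the same segment. The ids carry no meaning:
    segment 0 is not "sky" or "lionfish", just the first region found.
    """
    height, width = len(image), len(image[0])
    segment_ids = [[-1] * width for _ in range(height)]  # -1 = not yet visited
    next_id = 0
    for start_r in range(height):
        for start_c in range(width):
            if segment_ids[start_r][start_c] != -1:
                continue
            # Breadth-first flood fill outward from an unvisited seed pixel.
            segment_ids[start_r][start_c] = next_id
            queue = deque([(start_r, start_c)])
            while queue:
                r, c = queue.popleft()
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    if (0 <= nr < height and 0 <= nc < width
                            and segment_ids[nr][nc] == -1
                            and abs(image[nr][nc] - image[r][c]) <= threshold):
                        segment_ids[nr][nc] = next_id
                        queue.append((nr, nc))
            next_id += 1
    return segment_ids


toy = [
    [10, 12, 200, 205],
    [11, 13, 198, 202],
    [90, 92, 95, 201],
]
print(segment_by_similarity(toy))
# [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 2, 1]]
```

The dark block, the bright block, and the mid-gray strip become segments 0, 1, and 2, and nothing in the output says what any of them is. Because each pixel is compared only to its neighbor, a slow gradient can chain into a single region, which is one reason learned models like SAM do far better on real photographs.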

Several strong unsupervised segmentation models work on general images. One example is the Segment Anything Model (SAM), an open model released by Meta AI.

An image segmented by Segment Anything (source: https://github.com/facebookresearch/segment-anything)

Models like this are good at recognizing when objects are different from one another, and because they do not need to say what those objects are, they can be trained on general image data. Such a model might be able to tell which pixels belong to the lionfish even though it was never trained on any examples of lionfish, or on underwater images at all.

Semantic Segmentation

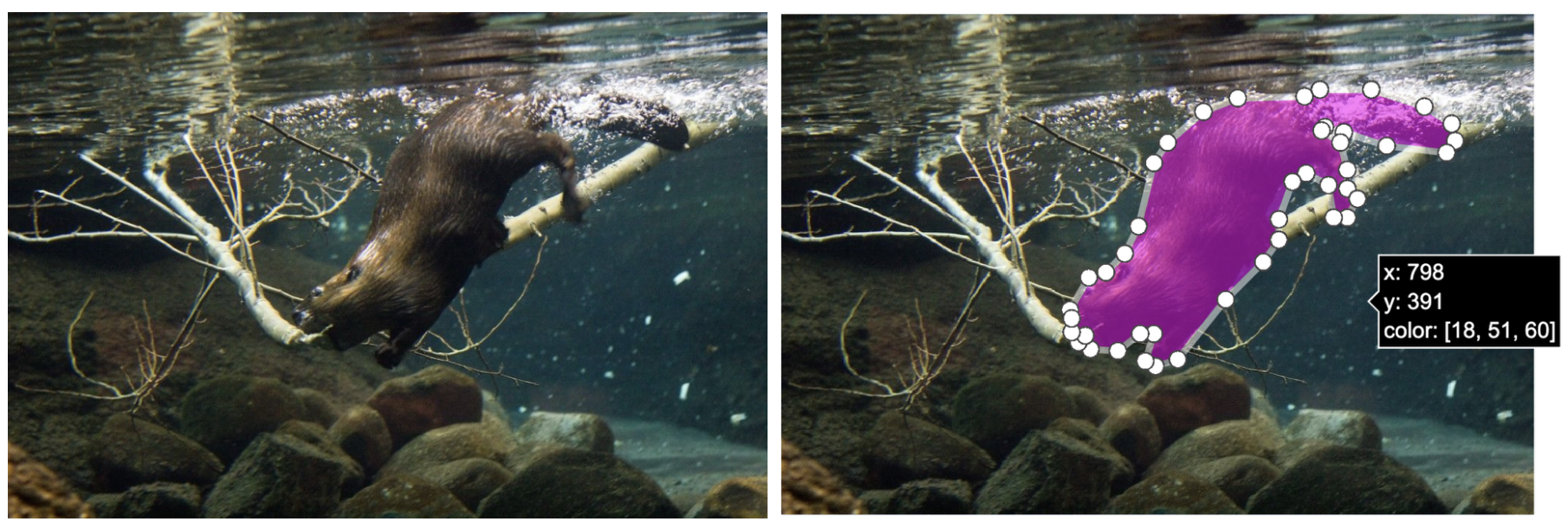

Semantic segmentation is a task in which the image must be segmented into different objects and those objects must be assigned labels like “human” or “lionfish”. Models for different applications usually need to be trained on human-annotated images specific to that application. Vision models for self-driving cars need labeled segmentation examples of vehicles, pedestrians, lanes, etc., seen from the perspective of a camera mounted on the car. A model for finding bone fractures in X-ray imagery would need many labeled segments of bone X-rays, both with and without the various kinds of fractures it must detect. Creating the datasets needed to train these models can be extremely slow and labor-intensive, and it usually involves having humans draw polygons around objects in hundreds or thousands of example images.

Drawing a polygon around a beaver to create a dataset for analyzing underwater images
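A polygon-drawing tool ultimately has to turn the annotator's clicked vertices into a pixel mask. A minimal sketch of that step, assuming vertices given as (x, y) pixel coordinates and the common convention that a pixel is inside when its center passes the even-odd (ray-casting) test. `rasterize_polygon` is a hypothetical name; real annotation tools, SegBuilder included, may treat boundary pixels differently.

```python
def rasterize_polygon(vertices, height, width):
    """Return a height x width boolean mask of pixels inside the polygon.

    `vertices` is a list of (x, y) points in pixel coordinates, in the order
    an annotator would click them. A pixel counts as inside if a ray cast to
    the right from its center (x + 0.5, y + 0.5) crosses an odd number of edges.
    """
    mask = [[False] * width for _ in range(height)]
    n = len(vertices)
    for row in range(height):
        py = row + 0.5
        for col in range(width):
            px = col + 0.5
            inside = False
            for i in range(n):
                x1, y1 = vertices[i]
                x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
                # Only edges that straddle the horizontal line y = py can be crossed.
                if (y1 > py) != (y2 > py):
                    # x coordinate where this edge meets the line y = py.
                    x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
                    if px < x_cross:
                        inside = not inside
            mask[row][col] = inside
    return mask


# A 3 x 2 rectangle drawn on a 5-pixel-wide, 4-pixel-tall image.
mask = rasterize_polygon([(1, 1), (4, 1), (4, 3), (1, 3)], height=4, width=5)
for row in mask:
    print("".join("#" if inside else "." for inside in row))
# .....
# .###.
# .###.
# .....
```

Testing every pixel against every edge costs O(height × width × vertices), which is fine for illustration; production tools typically use a scanline fill instead.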

SegBuilder

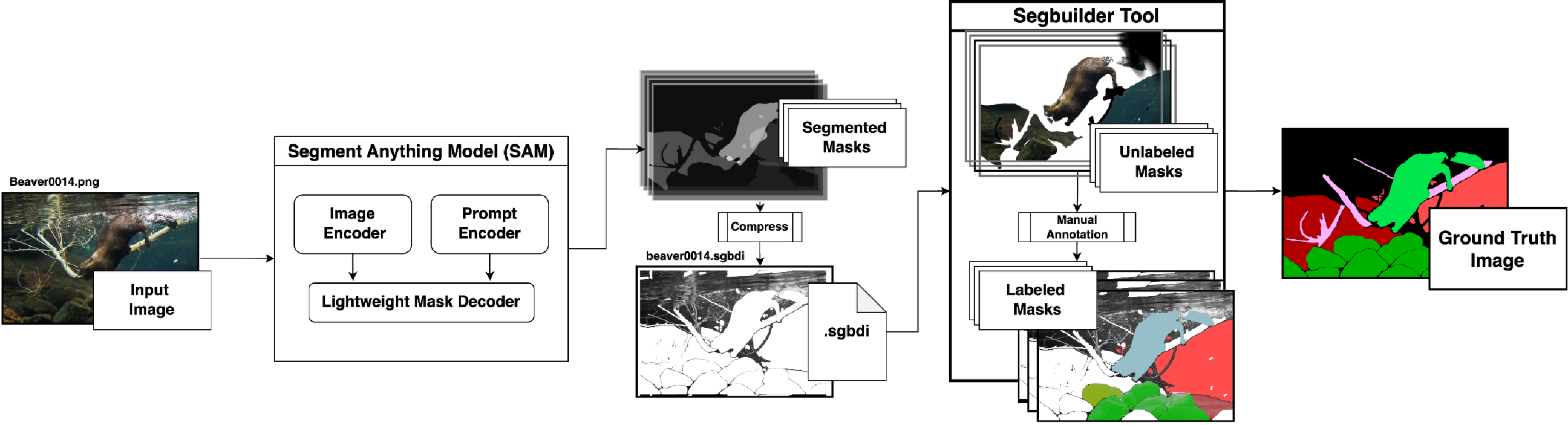

SegBuilder uses an unsupervised model like SAM to produce a starting point for building datasets that can train semantic segmentation models. SegBuilder presents each starting segment as an image mask that the annotator can then act on, for example by labeling, copying, or editing it.
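One way to represent what the annotator is handed is a small record per segment. This is a sketch under assumptions, not SegBuilder's actual data model: `Segment` and `segments_from_model_output` are hypothetical names, masks are nested lists of booleans, and each input record is assumed to store its mask under a `"segmentation"` key, as the records from SAM's automatic mask generator do (SAM stores a NumPy array there; nested lists stand in for it here).

```python
from dataclasses import dataclass
from typing import List, Optional

Mask = List[List[bool]]  # True where a pixel belongs to the segment


@dataclass
class Segment:
    mask: Mask
    source: str                  # "model" (unsupervised output) or "drawn" (annotator)
    label: Optional[str] = None  # semantic category; None until the annotator picks one

    @property
    def area(self) -> int:
        # Number of pixels in the segment (True counts as 1).
        return sum(sum(row) for row in self.mask)


def segments_from_model_output(records: List[dict]) -> List[Segment]:
    """Wrap each model mask as an unlabeled segment, largest first.

    Assumes every record stores its boolean mask under "segmentation".
    Sorting by area is an illustrative choice, e.g. so that large regions
    such as open water are reviewed before small details.
    """
    segments = [Segment(mask=r["segmentation"], source="model") for r in records]
    return sorted(segments, key=lambda s: s.area, reverse=True)


records = [
    {"segmentation": [[True, False], [False, False]]},
    {"segmentation": [[False, True], [True, True]]},
]
for seg in segments_from_model_output(records):
    print(seg.area, seg.source, seg.label)
# 3 model None
# 1 model None
```

Keeping a `source` field makes it easy to tell model-proposed segments apart from ones the annotator drew by hand.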

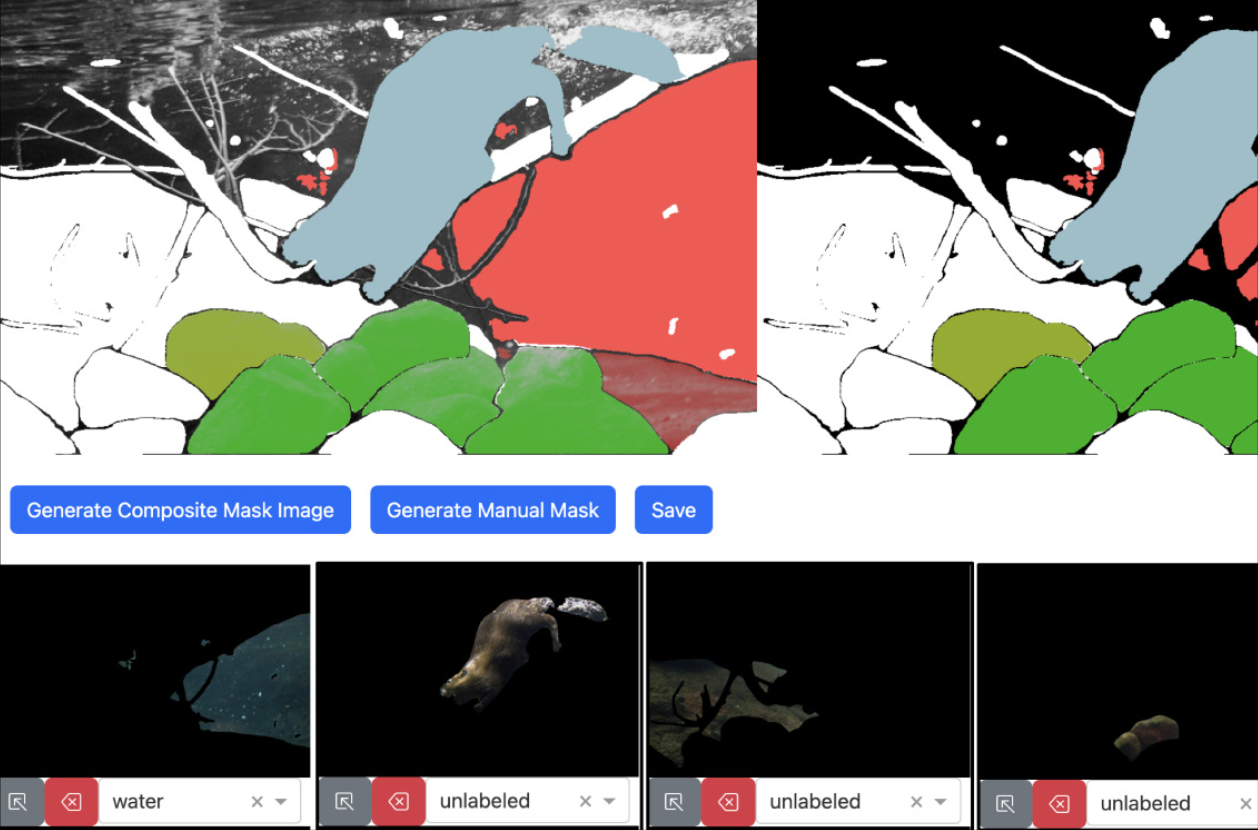

A SegBuilder workflow

In many cases, segments produced by the unsupervised model might perfectly match one of the semantic categories of interest. In this case, the annotator can simply select the appropriate label from a dropdown menu.
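Once segments carry labels, they can be flattened into the per-pixel class map that semantic segmentation models train on. A minimal sketch, assuming masks as nested lists of booleans and a hypothetical `class_ids` table standing in for the dropdown's category list; `build_label_map` is an illustrative name, not part of SegBuilder. Where masks overlap, the later mask wins, which is one simple policy among several.

```python
from typing import Dict, List, Tuple

Mask = List[List[bool]]


def build_label_map(labeled_masks: List[Tuple[Mask, str]],
                    class_ids: Dict[str, int],
                    height: int,
                    width: int,
                    background_id: int = 0) -> List[List[int]]:
    """Combine annotator-labeled masks into one per-pixel class-id map.

    Masks are painted in order, so a later mask overwrites an earlier one
    where they overlap; pixels covered by no mask get `background_id`.
    """
    label_map = [[background_id] * width for _ in range(height)]
    for mask, label in labeled_masks:
        class_id = class_ids[label]  # raises KeyError for a label not in the list
        for r in range(height):
            for c in range(width):
                if mask[r][c]:
                    label_map[r][c] = class_id
    return label_map


class_ids = {"background": 0, "human": 1, "lionfish": 2}
diver = [[True, True, False],
         [True, False, False]]
fish = [[False, False, True],
        [False, True, True]]
print(build_label_map([(diver, "human"), (fish, "lionfish")], class_ids, 2, 3))
# [[1, 1, 2], [1, 2, 2]]
```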

SegBuilder labeling

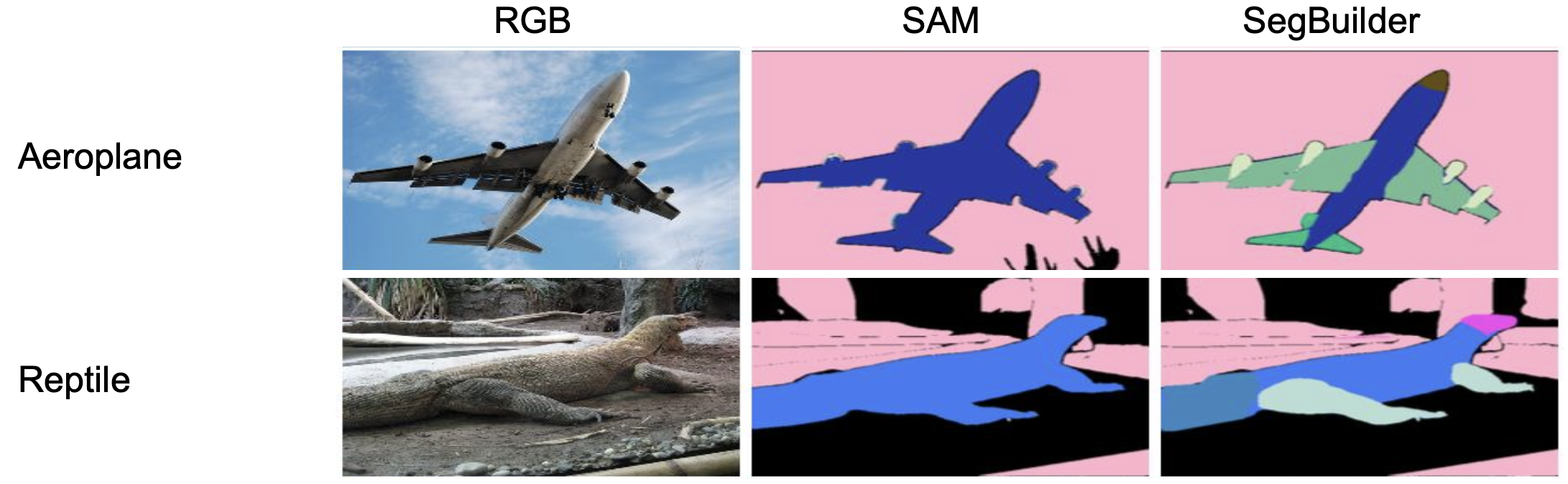

However, in many cases the unsupervised model does not capture the regions the annotator actually wants to label. For example, the annotator may want to label individual parts of an object that the unsupervised model tends to group together as one large object.

Part Segmentation

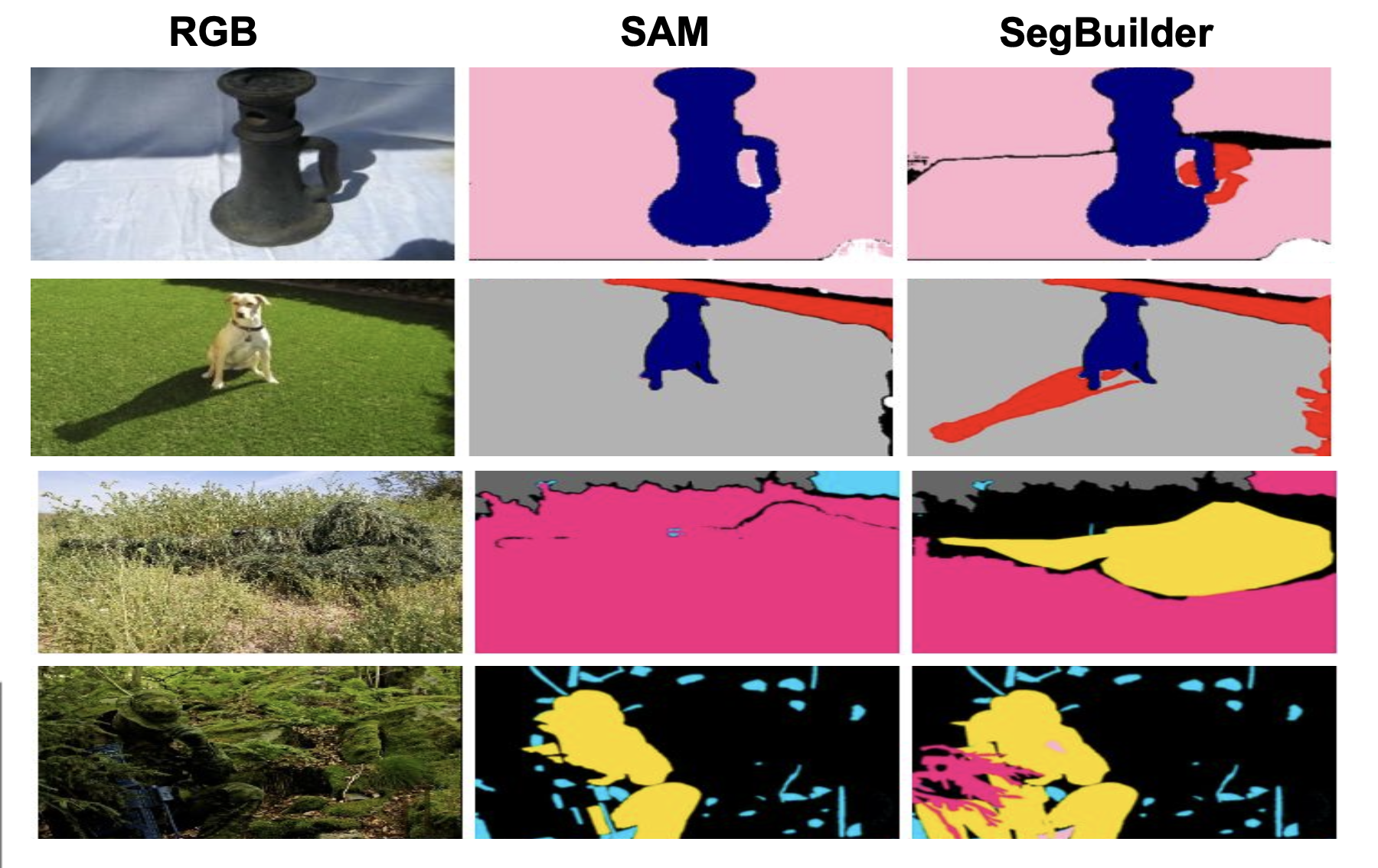

In other cases, the unsupervised model misses important things like shadows or camouflaged objects.

Part Segmentation

For these cases, SegBuilder lets the annotator draw new segments with a free-form drawing tool. Annotators can also copy, edit, and adjust the polygons of any segment produced by the unsupervised model.
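For part segmentation, one natural edit is to carve an annotator-drawn region out of a larger model segment, for example splitting a beaver's tail from its body. A minimal sketch using plain mask arithmetic, assuming the drawing has already been rasterized to a boolean mask of the same size; `carve_part` is a hypothetical helper, not SegBuilder's API. For a shadow or camouflaged object that the model missed entirely, the drawn mask would instead be used as a new segment on its own.

```python
from typing import List, Tuple

Mask = List[List[bool]]


def carve_part(parent: Mask, drawn: Mask) -> Tuple[Mask, Mask]:
    """Split a drawn region out of a model-produced segment.

    Returns (part, remainder):
      part      = parent AND drawn      (the drawing clipped to the parent)
      remainder = parent AND NOT drawn  (what is left of the parent)
    The two never overlap and together cover exactly the parent.
    """
    part = [[p and d for p, d in zip(p_row, d_row)]
            for p_row, d_row in zip(parent, drawn)]
    remainder = [[p and not d for p, d in zip(p_row, d_row)]
                 for p_row, d_row in zip(parent, drawn)]
    return part, remainder


beaver = [[True, True, True],
          [True, True, False]]
tail_stroke = [[False, False, True],
               [False, True, True]]  # spills outside the beaver at row 1, column 2
tail, body = carve_part(beaver, tail_stroke)
print(tail)  # [[False, False, True], [False, True, False]]
print(body)  # [[True, True, False], [True, False, False]]
```

Clipping the drawing to the parent means a slightly sloppy stroke cannot leak the part label onto neighboring pixels.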

Evaluation and Results

We performed an experiment in which four annotators were asked to annotate a collection of underwater images with 31 categories of interest. The annotators first annotated the images using SegBuilder and then using Label Studio, a popular polygon-drawing annotation tool. Annotation with SegBuilder was 3.3x faster.
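Reading the 3.3x figure as a ratio of total annotation times (our assumption about how the speed-up is defined), with the polygon-drawing tool as the baseline, it translates into time saved as follows:

```latex
S = \frac{T_{\text{baseline}}}{T_{\text{SegBuilder}}} = 3.3
\quad\Longrightarrow\quad
T_{\text{SegBuilder}} = \frac{T_{\text{baseline}}}{3.3} \approx 0.30\,T_{\text{baseline}}
```

In other words, SegBuilder needed roughly 30% of the baseline's time, about a 70% reduction. For a hypothetical image that took 10 minutes in the baseline tool, that would be about 3 minutes.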

We also performed experiments on a shadow and camouflage dataset and on a fine-grained parts dataset, and observed qualitative improvements over SAM-only labeling.