Getting Started with Large Language Models for the CS Curriculum

Date: April 15, 2024

A workshop introducing Large Language Models and the Hugging Face NLP libraries for CS educators who want to include LLM content in their curriculum, either as a standalone course or within existing courses.

In fall 2023, I taught a course on Natural Language Processing that covered modern techniques behind Large Language Models, such as attention and transformers, with applications to summarization, translation, classification, and conversation. I used Hugging Face models and datasets, along with the Hugging Face transformers library for Python. It was an insightful learning experience that I wanted to share with other CS educators, so I gave a workshop at the CCSC Central Plains 2024 conference. The workshop offered a basic introduction to transformers and the Hugging Face library, followed by a discussion of how this material could be integrated into various places in a typical CS curriculum.

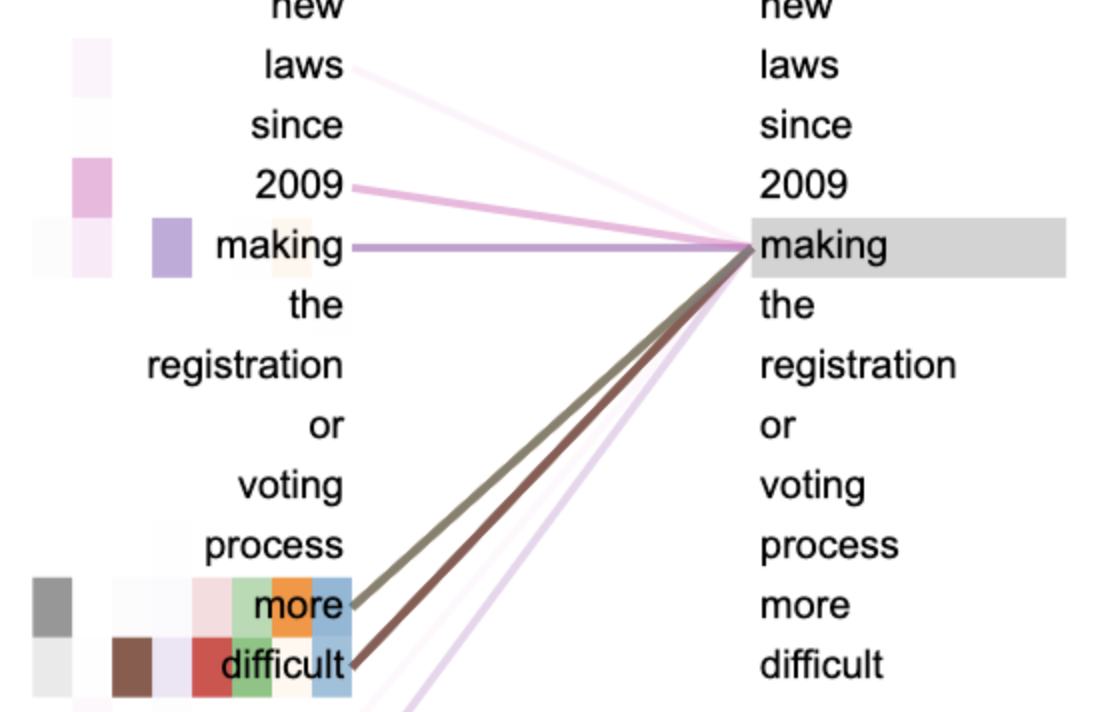

The cover image is from the "Attention Is All You Need" paper, which introduced the transformer architecture.
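The central operation introduced in that paper is scaled dot-product attention, softmax(QKᵀ/√d_k)V, where each query is compared against every key and the resulting weights mix the value vectors. The snippet below is a minimal sketch in plain Python, not code from the workshop notebook. The function names and toy matrices are illustrative assumptions, and a real course would more likely use a tensor library such as PyTorch.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V, with Q, K, V given as lists of row vectors."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        # Dot product of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output row = attention-weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
    return outputs

# Toy example (made-up numbers): 2 queries, 3 keys/values, d_k = 2.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(scaled_dot_product_attention(Q, K, V))
```

Because the softmax weights for each query sum to 1, every output row is a weighted average of the rows of V. This makes the example a compact way to show students why attention is often described as a learned, content-dependent averaging step.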

Materials

I presented slides along with sample code in a Jupyter notebook, and have shared both here: